We dream of a future in which technology eradicates road accidents. In this vision, fully autonomous cars will communicate with each other in a seamless, high-speed digital ballet, and human error will become a quaint relic of a bygone, more dangerous era. This utopian vision is a powerful and necessary motivator for innovation, but it masks a more complex and perilous transition period that will likely last for decades.

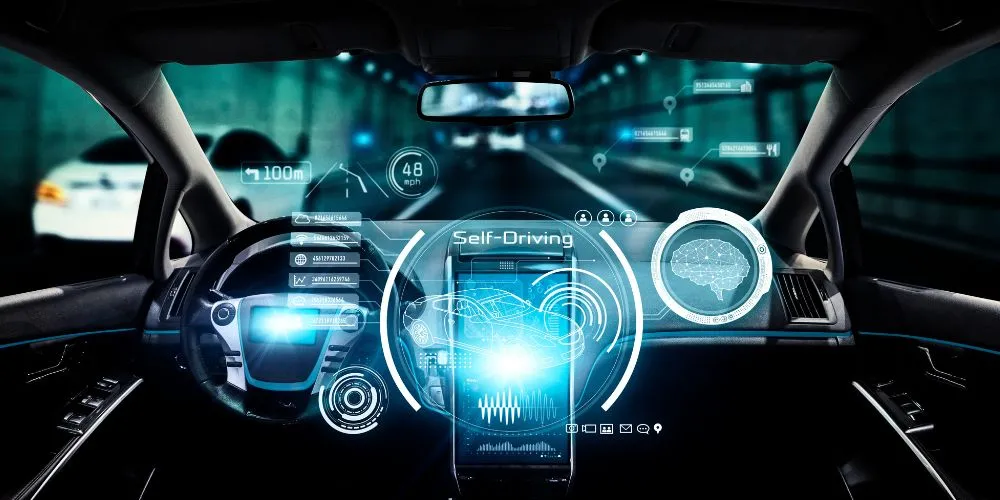

For the foreseeable future, our roads will be a volatile and unpredictable mix of legacy human-driven cars, semi-autonomous vehicles with varying levels of capability, and eventually, the first generation of fully autonomous vehicles. This hybrid environment presents entirely new and untested risks that we are ill-prepared to manage.

How does a human driver, accustomed to making eye contact and reading body language, anticipate the purely logical and perhaps jarringly abrupt actions of a machine? Conversely, how does an AI programmed to follow the rules strictly react to the irrational, emotional, and often unpredictable behavior of a human driver who might be angry, distracted, or impaired?

We are entering what could be the most perilous phase in automotive history: a period in which the old rules of the road and the new lines of code collide, with potentially fatal consequences. The focus of future technology should not be limited to the far-off, idealized goal of full autonomy; it must also address the messy, intermediate reality we will inhabit for years to come.

We urgently need technology that enhances human awareness and facilitates clear communication between these different classes of vehicles, not just systems that encourage us to disengage. The road to a safer future is not a sudden, clean leap but a long, carefully navigated journey through a complex and evolving technological landscape.